The architectural changes Amazon made to Redshift make it better able to handle large volumes of data for analytical queries.

T=T12:14:34-0600 lvl=dbug msg=getEngine logger=tsdb.postgres connection="
lvl=eror msg="Request error" logger=context userId=1 orgId=1 uname=admin error="runtime error: invalid memory address or nil pointer dereference" stack="C:/go/src/runtime/panic.go:491 (0x42f510)
ServerHandler.ServeHTTP: handler.ServeHTTP(rw, req)
C:/go/src/net/http/server.go:1801 (0x695503)

AWS REDSHIFT POSTGRES CODE

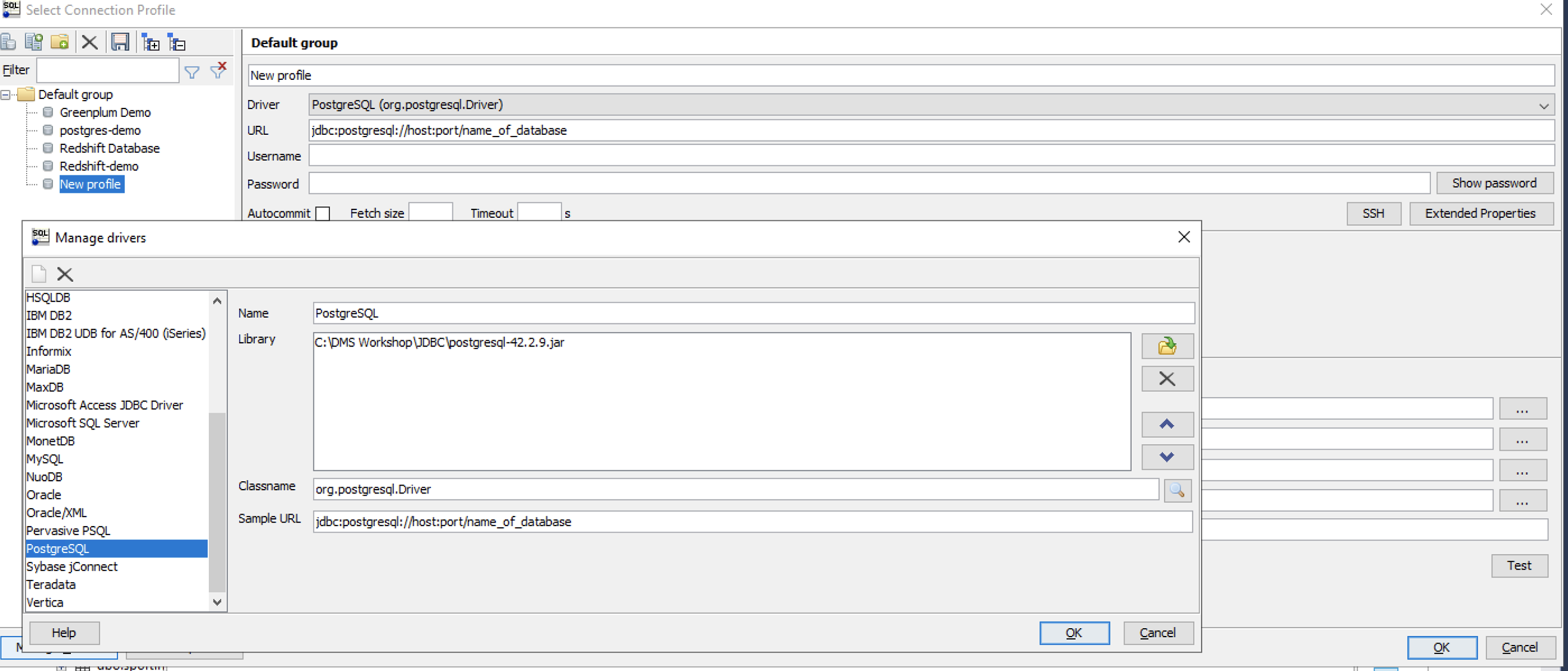

Here is an example where I query a data warehouse on Amazon Redshift. I have created a Redshift database from the AWS console. I get the connection information from the JDBC string: even if this references the redshift protocol (jdbc:redshift://.:5439/dev), I can use the postgresql one, because AWS forked the PostgreSQL open-source code to build the proprietary Redshift database.

Integrate.io does all the hard work for you when migrating from Postgres to Redshift.
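As a minimal sketch of that protocol swap, the helper below rewrites a `jdbc:redshift://` string into a `postgresql://` URL that PostgreSQL clients accept. The function name, the host name, and the `awsuser` user in the usage note are hypothetical placeholders, not values from this setup; the only fact relied on is that 5439 is the default Redshift port.

```python
from urllib.parse import urlsplit

JDBC_PREFIX = "jdbc:redshift://"


def jdbc_redshift_to_postgres(jdbc_url: str, user: str) -> str:
    """Rewrite jdbc:redshift://host:port/db as postgresql://user@host:port/db.

    Hypothetical helper for illustration; it keeps host, port and database
    name and ignores any JDBC query parameters.
    """
    if not jdbc_url.startswith(JDBC_PREFIX):
        raise ValueError("expected a jdbc:redshift:// URL")
    # Reuse the standard URL parser by swapping in a scheme it understands.
    parts = urlsplit("postgresql://" + jdbc_url[len(JDBC_PREFIX):])
    if not parts.hostname:
        raise ValueError("no host found in the JDBC URL")
    dbname = parts.path.lstrip("/")
    if not dbname:
        raise ValueError("no database name found in the JDBC URL")
    port = parts.port or 5439  # 5439 is the default Redshift port
    return f"postgresql://{user}@{parts.hostname}:{port}/{dbname}"
```

Calling `jdbc_redshift_to_postgres("jdbc:redshift://example-cluster.example.com:5439/dev", "awsuser")` yields a URL that can be passed to a PostgreSQL client or driver; the password is deliberately left out of the URL.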

You may have data on another PostgreSQL-compatible database. You may want to transfer data from it to YugabyteDB, or query it from there. Having this available in YugabyteDB, which is mainly optimized for OLTP, opens many possibilities.

Amazon Redshift is a fast, fully managed, petabyte-scale data warehouse solution that uses columnar storage to minimize I/O and provides high data compression.

These PostgreSQL data types are not supported in Amazon Redshift. 'char': a single-byte internal type (where the data type named char is enclosed in quotation marks). For more information about these types, see Special Character Types in the PostgreSQL documentation.

Nowadays you can define a copy activity to extract data from a Postgres RDS instance into S3. In the Data Pipeline interface: create a data node of the type SqlDataNode. Set up the database connection by specifying the RDS instance ID (the instance ID is in your URL, e.g.

Thanks to the predicate push-down, only 2 rows were returned, in 57 milliseconds. The PostgreSQL Foreign Data Wrapper has some limitations, so it is better to check the execution plan before executing a complex query. In case of doubt, it is easy to copy/paste the "Remote SQL" and explain it on the remote database (I did this in DBeaver).
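To make the foreign data wrapper setup and the execution-plan check more concrete, here is a hedged sketch that only builds the SQL text; it does not connect to anything. It assumes the standard `postgres_fdw` extension is available on the local database, and the server name, host, and credentials in the usage note are hypothetical.

```python
def sql_literal(value) -> str:
    """Quote a value as a SQL string literal, doubling embedded single quotes."""
    return "'" + str(value).replace("'", "''") + "'"


def fdw_setup_sql(server, host, port, dbname, user, password):
    """Return postgres_fdw DDL for a remote PostgreSQL-compatible server.

    `server` is used as a plain identifier, so pass a simple trusted name.
    """
    return [
        "CREATE EXTENSION IF NOT EXISTS postgres_fdw;",
        f"CREATE SERVER {server} FOREIGN DATA WRAPPER postgres_fdw "
        f"OPTIONS (host {sql_literal(host)}, port {sql_literal(port)}, "
        f"dbname {sql_literal(dbname)});",
        f"CREATE USER MAPPING FOR CURRENT_USER SERVER {server} "
        f"OPTIONS (user {sql_literal(user)}, password {sql_literal(password)});",
    ]


def explain_sql(query: str) -> str:
    """Wrap a query so the plan output includes the 'Remote SQL' sent to the server."""
    return f"EXPLAIN (VERBOSE) {query}"
```

For example, `fdw_setup_sql("redshift_srv", "example-cluster.example.com", 5439, "dev", "awsuser", "secret")` returns three statements to run in order, after which foreign tables can be declared on that server. Running the output of `explain_sql(...)` on a query against such a table shows which predicates appear in the "Remote SQL" line, which is the text to copy to the remote database when in doubt.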
